algorithm – by Alex Munoz

PageRank is an integral part of Google’s search engine, but the mathematics behind it can describe the properties of general directed networks by weighing the importance of individual nodes.
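As a minimal sketch of that mathematics, the power iteration below computes PageRank scores for a small directed graph given as an adjacency dict. The damping factor 0.85, the uniform redistribution of rank from dangling nodes (nodes with no outgoing links), and the function name `pagerank` are illustrative assumptions, not details taken from the post.

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=1000):
    """Power-iteration PageRank; links maps each node to the nodes it points to."""
    nodes = sorted(set(links) | {w for outs in links.values() for w in outs})
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}  # start from the uniform distribution
    for _ in range(max_iter):
        # teleportation term: every node gets (1 - d) / n
        new = {v: (1.0 - damping) / n for v in nodes}
        # rank held by dangling nodes is spread uniformly over all nodes
        dangling = sum(rank[v] for v in nodes if not links.get(v))
        for v in nodes:
            outs = links.get(v, [])
            if outs:
                share = damping * rank[v] / len(outs)
                for w in outs:
                    new[w] += share
        for v in nodes:
            new[v] += damping * dangling / n
        delta = sum(abs(new[v] - rank[v]) for v in nodes)  # L1 change
        rank = new
        if delta < tol:
            break
    return rank


ranks = pagerank({"A": ["B", "C"], "B": ["C"], "C": ["A"]})
print(ranks)  # C ranks highest, then A, then B
```

The scores always sum to 1, so they can be read as the stationary distribution of a random surfer who follows links with probability 0.85 and otherwise jumps to a random node.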

algorithm – by Xiongjie Yu

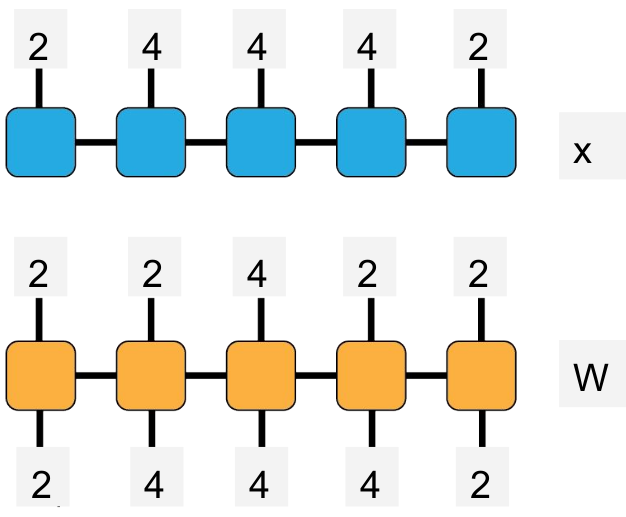

Redundancy in the weight matrix of a neural network can be greatly reduced with a tensor network formulation. Compression rates above 99% can be achieved while maintaining accuracy. The tensor train representation of a neural network is compact. This formalism may allow neural networks to be trained on mobile devices.
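To illustrate the tensor train idea, here is a minimal sketch of the standard TT-SVD algorithm in NumPy: the array is split into a chain of small 3-way cores by sequential truncated SVDs. The helper names `tt_svd` and `tt_to_full`, the naive reshaping of a 64x64 matrix into a 6-way tensor (TT layers usually pair row and column indices instead), and the synthetic low-rank test matrix are all assumptions for illustration; this is not the author's code.

```python
import numpy as np


def tt_svd(tensor, max_rank):
    """Split a d-way array into tensor-train cores with sequential truncated SVDs."""
    shape = tensor.shape
    cores = []
    r_prev = 1
    mat = tensor.reshape(shape[0], -1)
    for k in range(len(shape) - 1):
        u, s, vt = np.linalg.svd(mat, full_matrices=False)
        r = min(max_rank, len(s))  # keep at most max_rank singular values
        cores.append(u[:, :r].reshape(r_prev, shape[k], r))
        # carry the remaining factor forward and fold in the next mode
        mat = (s[:r, None] * vt[:r, :]).reshape(r * shape[k + 1], -1)
        r_prev = r
    cores.append(mat.reshape(r_prev, shape[-1], 1))
    return cores


def tt_to_full(cores):
    """Contract the chain of cores back into a dense array."""
    result = cores[0]
    for core in cores[1:]:
        result = np.tensordot(result, core, axes=([-1], [0]))
    return result.reshape([c.shape[1] for c in cores])


# Hypothetical 64x64 "weight matrix" built to have TT rank 2 when viewed as a 4x4x4x4x4x4 tensor
rng = np.random.default_rng(0)
true_cores = [rng.standard_normal((1 if k == 0 else 2, 4, 1 if k == 5 else 2)) for k in range(6)]
W = tt_to_full(true_cores).reshape(64, 64)

cores = tt_svd(W.reshape(4, 4, 4, 4, 4, 4), max_rank=2)
approx = tt_to_full(cores).reshape(64, 64)
print(np.allclose(W, approx))  # True
print(sum(c.size for c in cores), W.size)  # 80 4096
```

Here 80 core parameters stand in for 4096 dense entries. A trained weight matrix is not exactly low-rank, so in practice `max_rank` trades accuracy against compression.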

algorithm – by Brian Busemeyer

Simulated annealing is a method for optimizing noisy, high-dimensional objective functions using ideas from materials science.
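A minimal sketch of the method, assuming a Gaussian proposal step, the Metropolis acceptance rule, and a geometric cooling schedule. The function name, the parameter defaults, and the Rastrigin test function are illustrative choices, not taken from the post. The routine also keeps the best point visited.

```python
import math
import random


def simulated_annealing(objective, x0, step=0.5, t_start=1.0, t_end=1e-3, n_iter=20000, seed=0):
    """Minimize objective(list[float]) starting from x0 with a cooling schedule."""
    rng = random.Random(seed)
    x = list(x0)
    fx = objective(x)
    best_x, best_f = list(x), fx
    for i in range(n_iter):
        # geometric cooling from t_start down to t_end
        t = t_start * (t_end / t_start) ** (i / (n_iter - 1))
        candidate = [xi + rng.gauss(0.0, step) for xi in x]
        fc = objective(candidate)
        # Metropolis rule: always accept downhill, accept uphill with prob exp(-df / t)
        if fc <= fx or rng.random() < math.exp(-(fc - fx) / t):
            x, fx = candidate, fc
            if fx < best_f:
                best_x, best_f = list(x), fx
    return best_x, best_f


def rastrigin(v):
    # many local minima; global minimum 0 at the origin
    return 10 * len(v) + sum(vi * vi - 10 * math.cos(2 * math.pi * vi) for vi in v)


x0 = [3.0, -2.5]
best_x, best_f = simulated_annealing(rastrigin, x0, t_start=10.0)
print(best_x, best_f)
```

While the temperature is high, uphill moves are accepted often enough to escape local minima. As it falls, the acceptance rule approaches greedy descent.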

algorithm – by Benjamin Villalonga Correa

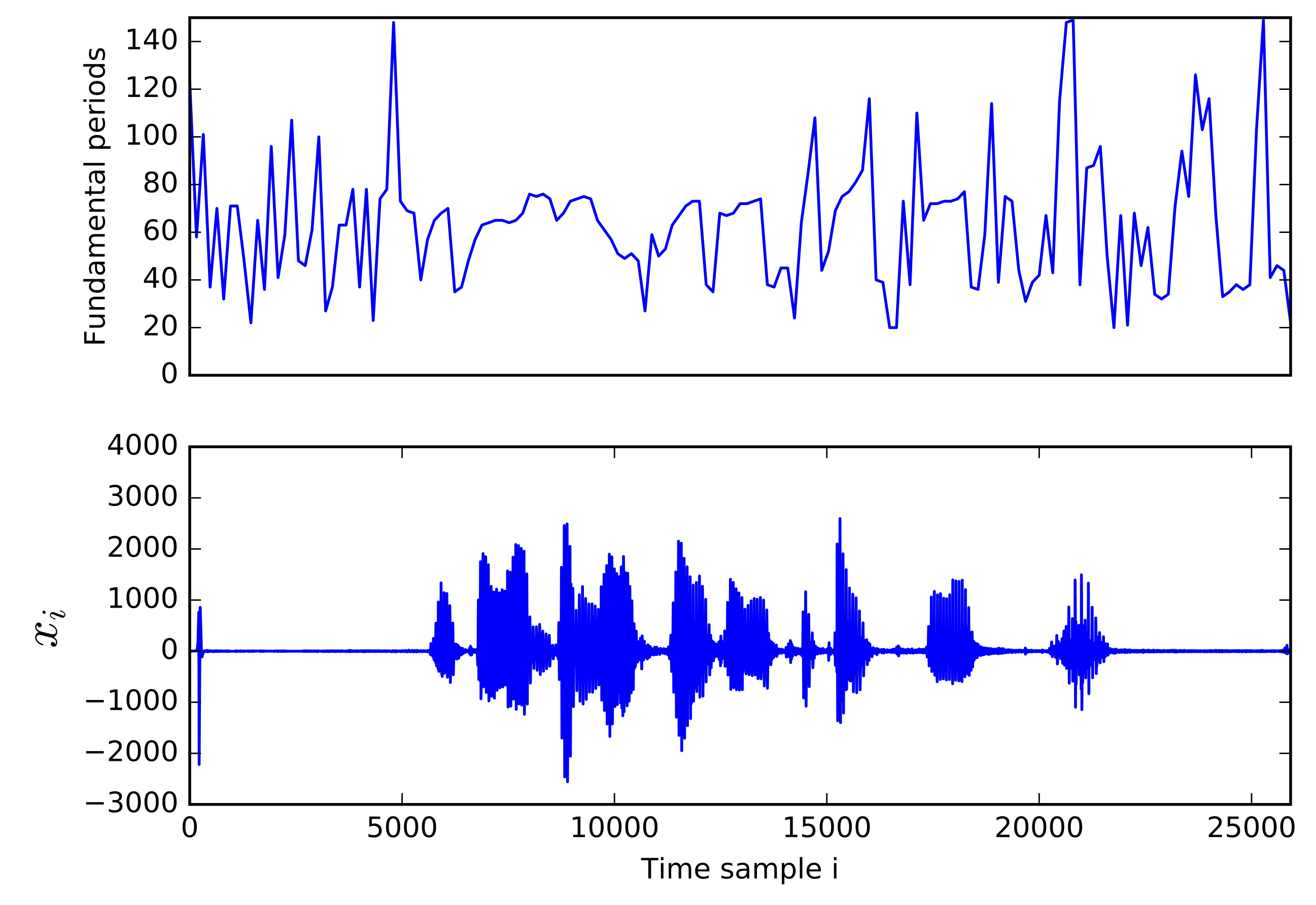

Problems in audio compression are a subset of the general problem of signal compression, and general techniques can be applied to solve them. However, compression can benefit greatly from knowing the very particular way in which the human brain perceives and interprets sound: a codec can then keep only the information that is relevant to human perception. In this presentation, I focus on speech compression, and more particularly on an implementation using a Linear Predictive Model (LPM). The LPM reconstructs a signal very efficiently from a very small set of compressed data (up to 95% of the data can be discarded), generating synthesized speech that preserves the original phonemes and the quality of the speaker's voice, so the speaker can still be recognized easily. This technique has been used in telephony applications.
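The summary does not show the author's implementation. As a hedged sketch of the core of linear prediction, the code below estimates predictor coefficients with the autocorrelation method and the Levinson-Durbin recursion. The function name `lpc`, the model order, and the synthetic second-order autoregressive test signal (standing in for speech) are assumptions. Real speech coders apply this frame by frame over short windows, typically with an order around 10.

```python
import numpy as np


def lpc(frame, order):
    """Return (a, err): a = [1, a1, ..., ap] with e[n] = sum_k a[k] * x[n - k]."""
    x = np.asarray(frame, dtype=float)
    n = len(x)
    # autocorrelation r[0..order]
    r = np.array([np.dot(x[: n - k], x[k:]) for k in range(order + 1)])
    a = np.zeros(order + 1)
    a[0] = 1.0
    err = r[0]
    for i in range(1, order + 1):
        # Levinson-Durbin: reflection coefficient for step i
        acc = r[i] + np.dot(a[1:i], r[i - 1:0:-1])
        k = -acc / err
        a[1:i] = a[1:i] + k * a[i - 1:0:-1]
        a[i] = k
        err *= 1.0 - k * k  # remaining prediction-error energy
    return a, err


# Hypothetical test signal: x[t] = 1.3 x[t-1] - 0.6 x[t-2] + white noise
rng = np.random.default_rng(0)
n = 20000
noise = rng.standard_normal(n)
x = np.zeros(n)
for t in range(2, n):
    x[t] = 1.3 * x[t - 1] - 0.6 * x[t - 2] + noise[t]

a, err = lpc(x, order=2)
residual = np.convolve(x, a)[:n]  # prediction error e[n]
print(np.round(a, 2))  # should be close to [1, -1.3, 0.6]
print(np.var(residual) / np.var(x))
```

The residual carries much less energy than the signal. This is why an LPC-style coder can transmit a handful of coefficients per frame plus a compact description of the excitation instead of every sample.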

algorithm – by Will Wheeler

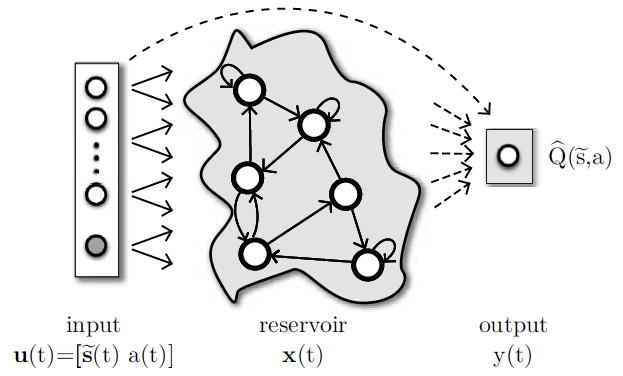

Recognize a million words per second.
